AI Decodes Speech

February 8, 2023

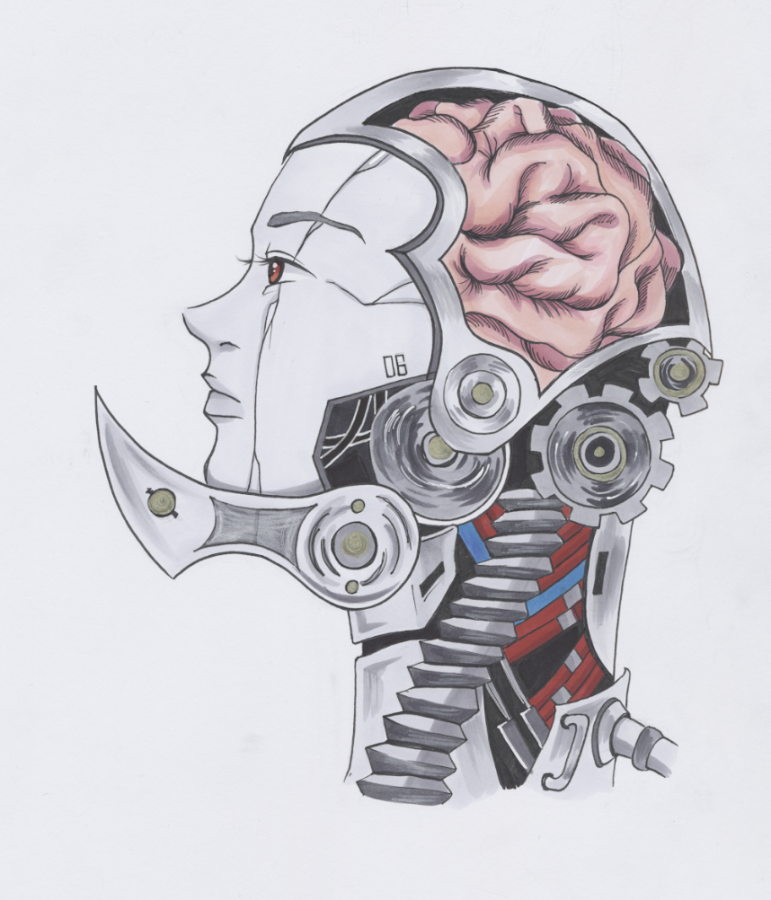

Every year, more than 69 million people worldwide suffer traumatic brain injuries with lasting consequences. Many are left paralyzed or otherwise unable to communicate; much of what they would like to express stays trapped inside their brains.

In hopes of improving the lives of those affected, a group of researchers recently developed a technology that decodes speech directly from brain recordings, without surgical procedures. Offering a glimmer of hope, this new technology, developed by Meta AI, the research arm of Meta (formerly Facebook), takes the first steps toward decoding speech from noninvasive recordings of brain activity.

For those facing a neurodegenerative disease (one in which cells of the central nervous system are damaged or die) or a spinal or brain injury, this new computational model could offer a safer alternative to methods that require open brain surgery. Instead of needing electrodes embedded in the brain, the new AI technology aims to reconstruct words from neural activity that would otherwise remain out of reach for listeners.

Working from the École Normale Supérieure in Paris, Meta AI researcher Jean-Rémi King and his colleagues trained a computational model to pick out both words and sentences from 56,000 hours of speech recordings in 53 languages. This approach, King says, “could provide a viable path to help patients with communication deficits … without the use of invasive methods.” Known as a language tool, this model is trained to recognize distinctive features of language at different levels. Psychology instructor Meaghan Roarty also says that “researchers have been using functional magnetic resonance imaging (fMRI) to decode brain activity… mapping out the activity associated with participants viewing all sorts of images from objects to animals to human faces.”

The model detects features both at a fine-grained level, such as letters or simple syllables, and at a more general level, such as words or sentences. To test its accuracy, King and his team applied an AI with this language tool to databases from four different institutions, which contained the brain activity of 169 volunteers.

Tasked with listening to snippets of stories such as Lewis Carroll’s Alice’s Adventures in Wonderland and Ernest Hemingway’s The Old Man and the Sea, the participants had their brain signals recorded with either magnetoencephalography (MEG) or electroencephalography (EEG). MEG maps brain activity by measuring the magnetic fields created by electric currents in the brain, while EEG measures the electrical activity itself. Both techniques make it possible to examine brain signals noninvasively.

By observing the fluctuations of magnetic and electric fields spurred by neural activity, both systems can take up to 1,000 snapshots of macroscopic brain activity every second, using hundreds of sensors placed around the head. Meta AI drew on four open-source EEG and MEG datasets, totaling more than 150 hours of recordings. According to Meta AI, the results with MEG show that from just three seconds of brain activity, the AI can decode the corresponding chunks of speech. The correct answer appeared among the AI’s top 10 guesses up to 73 percent of the time, out of a large pool of 793 words used on a daily basis, which shows the growing precision of this technology. With EEG, however, the results varied, and accuracy dropped to no more than 30 percent.
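To make the “top 10 guesses” measure concrete, here is a minimal sketch in Python. It is not Meta AI’s code: the function name `top_k_accuracy` and the toy word lists are made up for illustration, and the numbers have nothing to do with the study’s results. It only shows how such a score is counted: a trial is a hit if the true word appears anywhere in the first k guesses.

```python
def top_k_accuracy(ranked_guesses, true_words, k=10):
    """Fraction of trials whose true word appears among the first k guesses."""
    if len(ranked_guesses) != len(true_words):
        raise ValueError("need one list of guesses per true word")
    if not true_words:
        raise ValueError("need at least one trial")
    hits = sum(
        1
        for guesses, truth in zip(ranked_guesses, true_words)
        if truth in guesses[:k]
    )
    return hits / len(true_words)


# Made-up toy trials (not data from the study). Each inner list is ordered
# from the most likely guess to the least likely guess.
toy_guesses = [
    ["rabbit", "habit", "rapid"],
    ["sea", "see", "tea"],
    ["old", "cold", "gold"],
    ["man", "fish", "boat"],
]
toy_truth = ["rabbit", "tea", "bold", "fish"]

top1 = top_k_accuracy(toy_guesses, toy_truth, k=1)
top3 = top_k_accuracy(toy_guesses, toy_truth, k=3)
print(top1, top3)
```

A larger k is more forgiving, which is why a top-10 score is easier to reach than a score that counts only the single best guess.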

Both the EEG and MEG recordings are fed into a deep convolutional network, which the researchers call a “brain” model, where they can be analyzed. Recordings vary widely among individuals because of the unique makeup of each brain, differences in the timing of neural functions, and the placement of the sensors. As a result, analyzing brain data usually takes complicated engineering, such as a pipeline built to realign the brain signals on a template brain to get the best results. In the past, brain decoders were trained on a small number of recordings to predict a limited set of speech features, such as a simple vocabulary. These new technological advancements allow us to dream even further.

While using brain activity to decode speech has long been a goal for both neuroscientists and clinicians, much of the progress has relied on risky, invasive brain-recording methods, like stereotactic electroencephalography and electrocorticography. Although these methods provide a much clearer signal than noninvasive ones, they require surgery.

With this new technology, the researchers attempted to decode what the volunteers had heard from just seconds of brain activity. To do so, the team had the AI align the speech sounds from the story recordings with the patterns of brain activity that it computed as corresponding to what people were hearing.
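One way to picture matching brain activity to speech sounds is to imagine that a snippet of brain activity and each candidate speech snippet are both summarized as short lists of numbers, and that the closest match wins. The Python sketch below rests on that simplifying assumption and is not the researchers’ actual method; the function names, the three-number summaries, and the snippet labels are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two equal-length vectors, from -1 (opposite) to 1 (same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_candidates(brain_vector, candidates):
    """Return candidate labels sorted from most to least similar to the brain vector."""
    return sorted(
        candidates,
        key=lambda label: cosine_similarity(brain_vector, candidates[label]),
        reverse=True,
    )


# Invented three-number summaries, for illustration only. Real recordings
# and speech features are far larger than this.
candidate_snippets = {
    "down the rabbit hole": [0.9, 0.1, 0.0],
    "the old man and the sea": [0.1, 0.8, 0.3],
    "curiouser and curiouser": [0.0, 0.2, 0.9],
}
brain_snippet = [0.2, 0.7, 0.4]

ranking = rank_candidates(brain_snippet, candidate_snippets)
print(ranking[0])
```

The first entry of `ranking` is the single best guess, and the first ten entries of a longer list would be the “top 10 guesses.”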

Given 1,000 possibilities to choose from, the AI could predict what the person had heard from the information collected. Meta AI calls this zero-shot classification: given a snippet of brain activity, the model determines what the person heard out of a big pool of possibilities. From there, the algorithm can choose the word the person most likely heard. The AI’s improved ability to learn how to decode varying brain recordings is an exciting step toward decoding speech more broadly. As with every new innovation, there are ethical questions. Ms. Roarty says the technology can create “privacy concerns. There are also significant concerns over the subjective nature of emotions, which makes emotional AI especially prone to biases.”

However, while the work is a step in the right direction, King says this new study is all about the “decoding of speech perception, not production.” Speech production remains the overall goal for many scientists and researchers, but as of now, “we’re quite a long way away.”